Theater director Reid Farrington envisions a brand-new multimedia theatrical production about the art of acting, seen through the lens of the extraordinary life and career of Marlon Brando, who believed that human actors could, and might, be replaced by photo-real digital avatars.

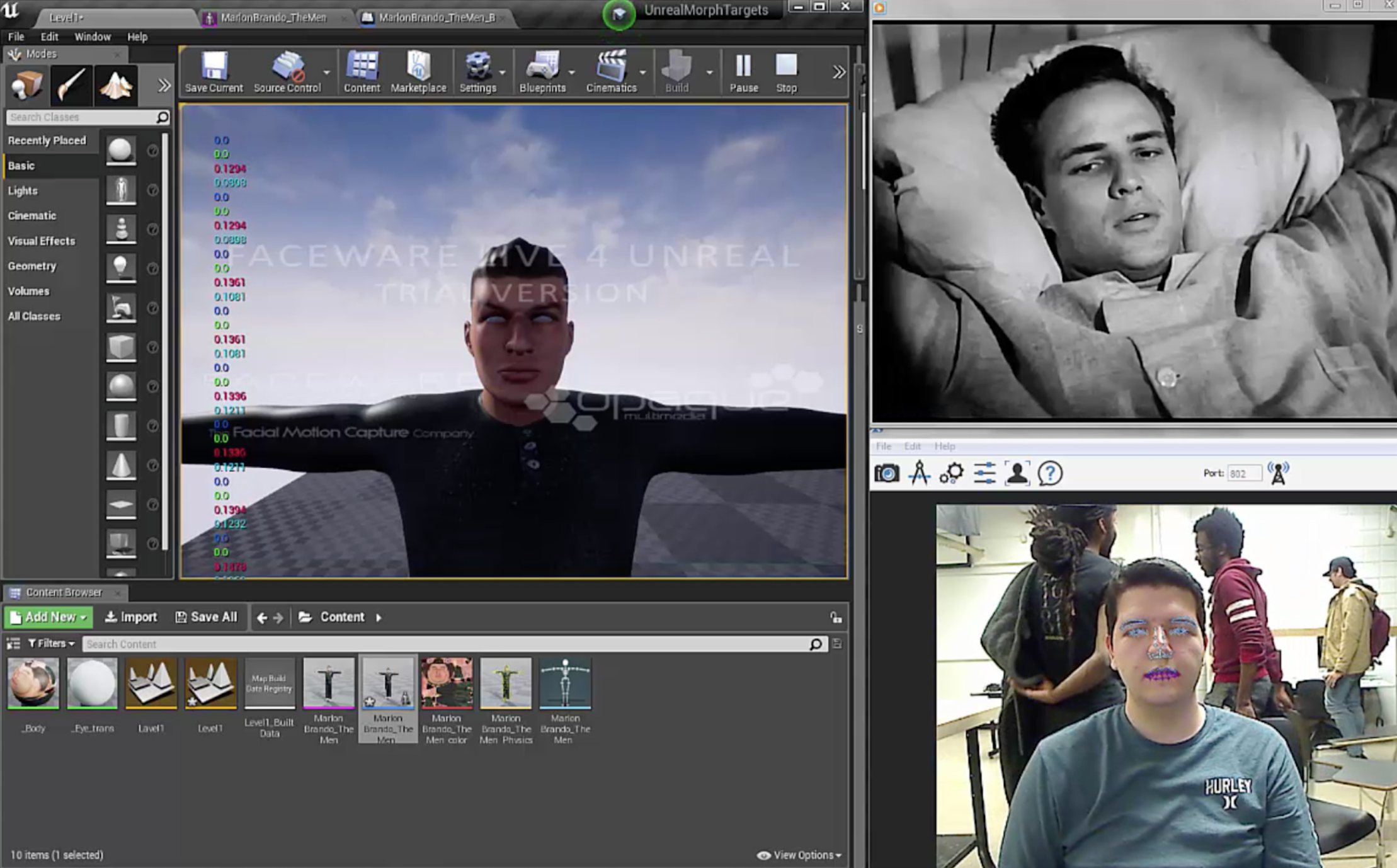

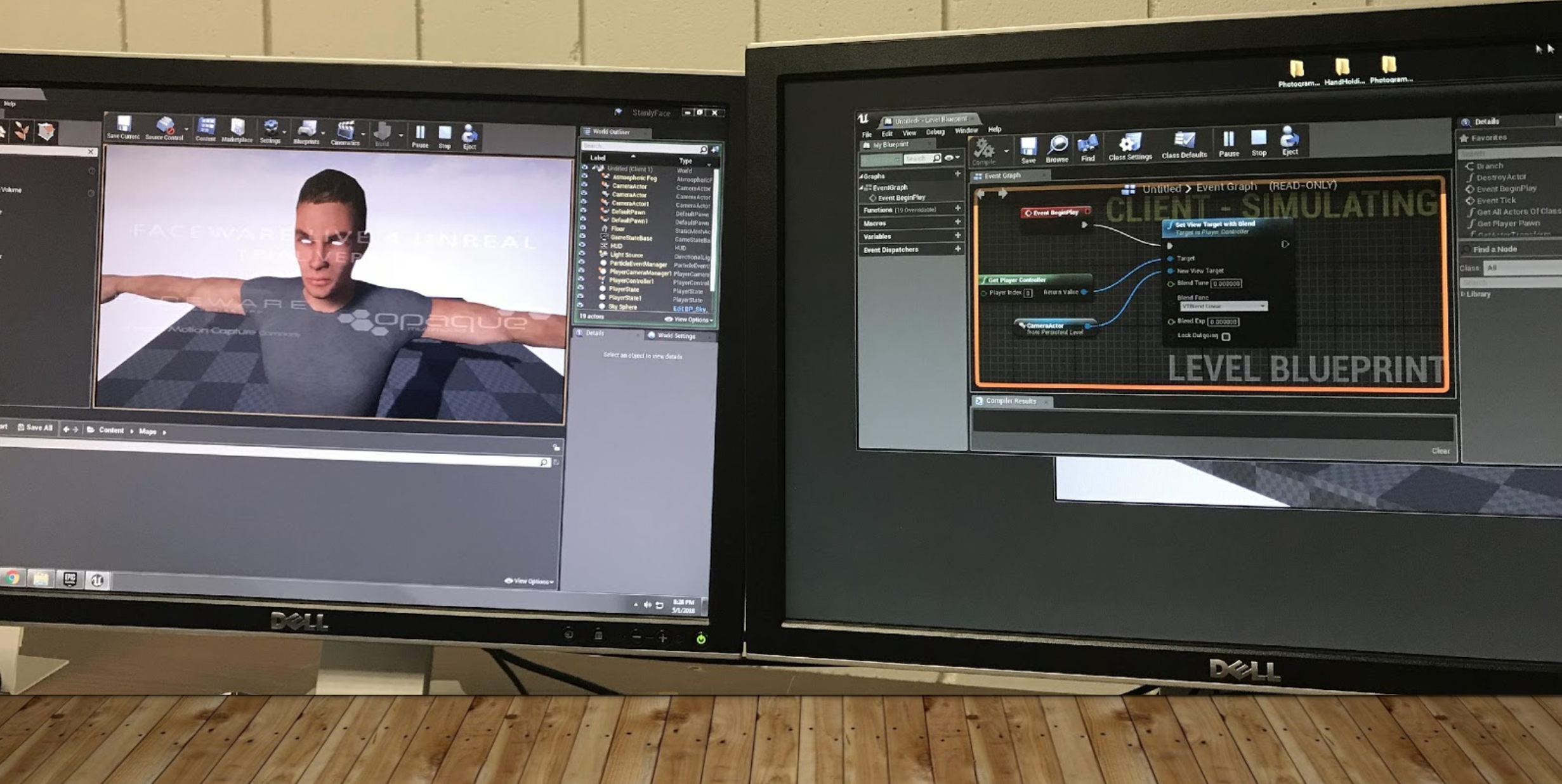

In this video, I am using Unreal to interpret values from the Faceware extension and drive the facial gestures of a Maya-generated 3D model of Marlon Brando as he appeared in the film Last Tango in Paris.

MODELING THE THEATER IN MAYA AND UNREAL

The team documented every element that composes the Voorhees Theater

Each asset was 3D modeled in Maya and exported as an .fbx file

These models were then imported into a master scene within Unreal

Using actual blueprints of the theater, the elements were assembled together to scale
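
Assembling elements to scale comes down to converting blueprint measurements into engine units. The sketch below is only an illustration: it assumes the blueprints are dimensioned in feet and inches (the source does not say) and relies on Unreal's default convention of one unit per centimeter. The function name is hypothetical.

```python
# Minimal sketch: convert a blueprint dimension to Unreal units.
# Assumption: blueprint dimensions are given in feet and inches.
# Unreal's default scale is 1 unit = 1 centimeter.

CM_PER_INCH = 2.54
INCHES_PER_FOOT = 12


def blueprint_to_unreal_units(feet: float, inches: float = 0.0) -> float:
    """Return the length in Unreal units (centimeters)."""
    total_inches = feet * INCHES_PER_FOOT + inches
    return total_inches * CM_PER_INCH


if __name__ == "__main__":
    # Hypothetical example: a stage opening drawn as 32 ft 6 in wide.
    print(blueprint_to_unreal_units(32, 6))
```

Keeping every asset in the same unit before export avoids rescaling individual .fbx files after import.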

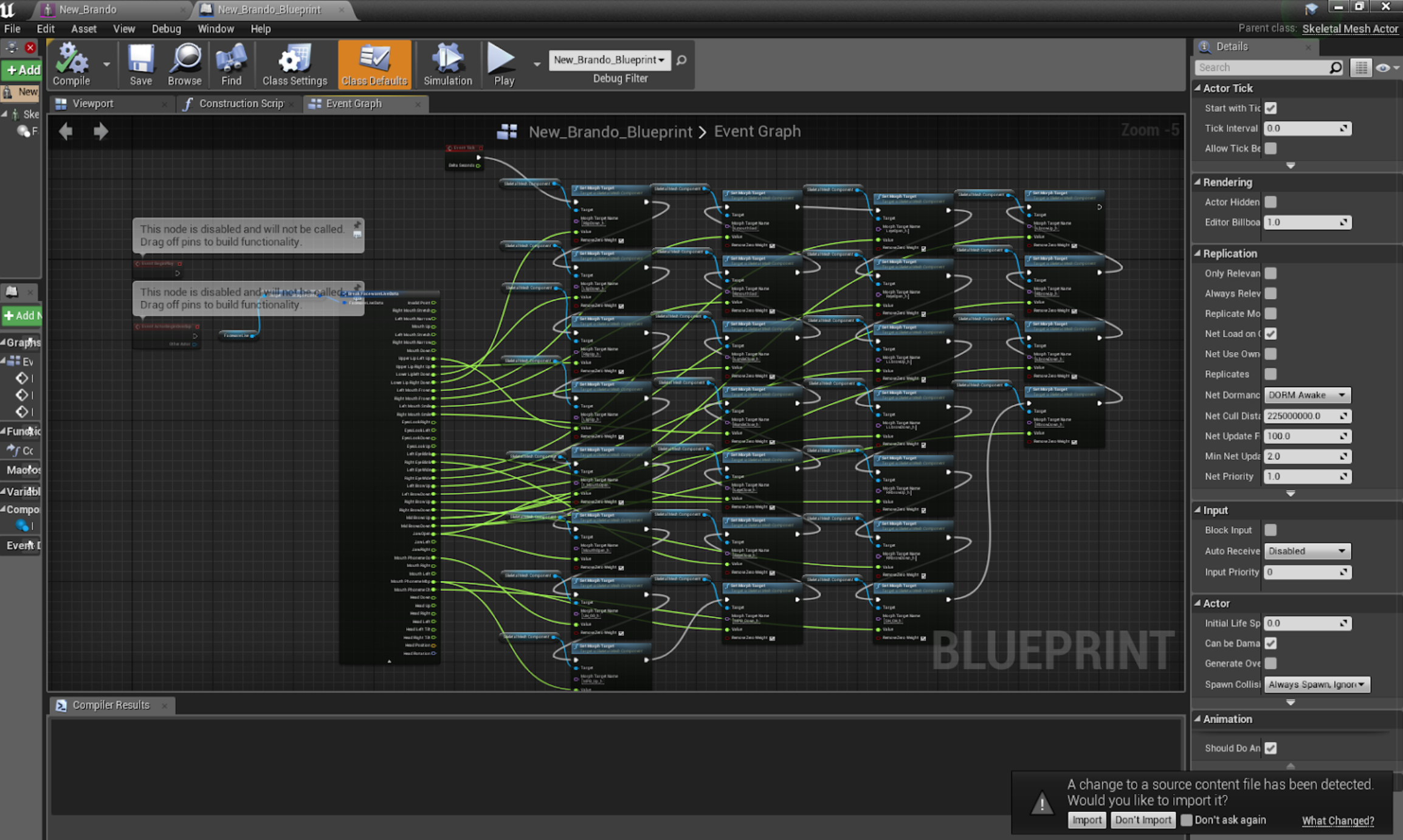

BLENDSHAPES

Within Maya, the Brando digital avatar is composed of numerous Blendshapes that are associated with specific morph targets

Within Unreal, the Faceware Live plugin tracks the movement of a person’s face, and the resulting data is used to control the morph targets that drive the avatar’s facial gestures.

More Blendshapes were created to achieve more nuanced expressions
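
The tracking-to-morph-target step can be pictured as a small mapping layer. The sketch below is not the Faceware or Unreal API; the channel names, morph target names, and gain parameter are all hypothetical, and it only shows the general idea of turning per-frame tracker values into clamped morph target weights with light smoothing.

```python
from typing import Dict

# Hypothetical mapping from tracker channels to morph target names.
# Neither set of names comes from Faceware or from the Brando rig.
CHANNEL_TO_MORPH = {
    "jaw_open": "Brando_JawOpen",
    "brow_up_left": "Brando_BrowUp_L",
    "smile_right": "Brando_Smile_R",
}


def clamp01(value: float) -> float:
    """Keep a weight inside the usual 0..1 morph target range."""
    return max(0.0, min(1.0, value))


def tracker_to_morph_weights(frame: Dict[str, float], gain: float = 1.0) -> Dict[str, float]:
    """Convert one frame of tracker values into morph target weights."""
    weights = {}
    for channel, raw in frame.items():
        target = CHANNEL_TO_MORPH.get(channel)
        if target is None:
            continue  # ignore channels the avatar has no morph target for
        weights[target] = clamp01(raw * gain)
    return weights


def smooth(previous: Dict[str, float], current: Dict[str, float], alpha: float = 0.5) -> Dict[str, float]:
    """Exponential smoothing between frames to reduce tracking jitter."""
    return {
        name: previous.get(name, 0.0) + alpha * (value - previous.get(name, 0.0))
        for name, value in current.items()
    }


if __name__ == "__main__":
    frame = {"jaw_open": 0.7, "smile_right": 0.2, "unmapped": 0.9}
    print(tracker_to_morph_weights(frame))
```

Adding a Blendshape for a more nuanced expression would, in this picture, mean adding one more entry to the mapping table.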

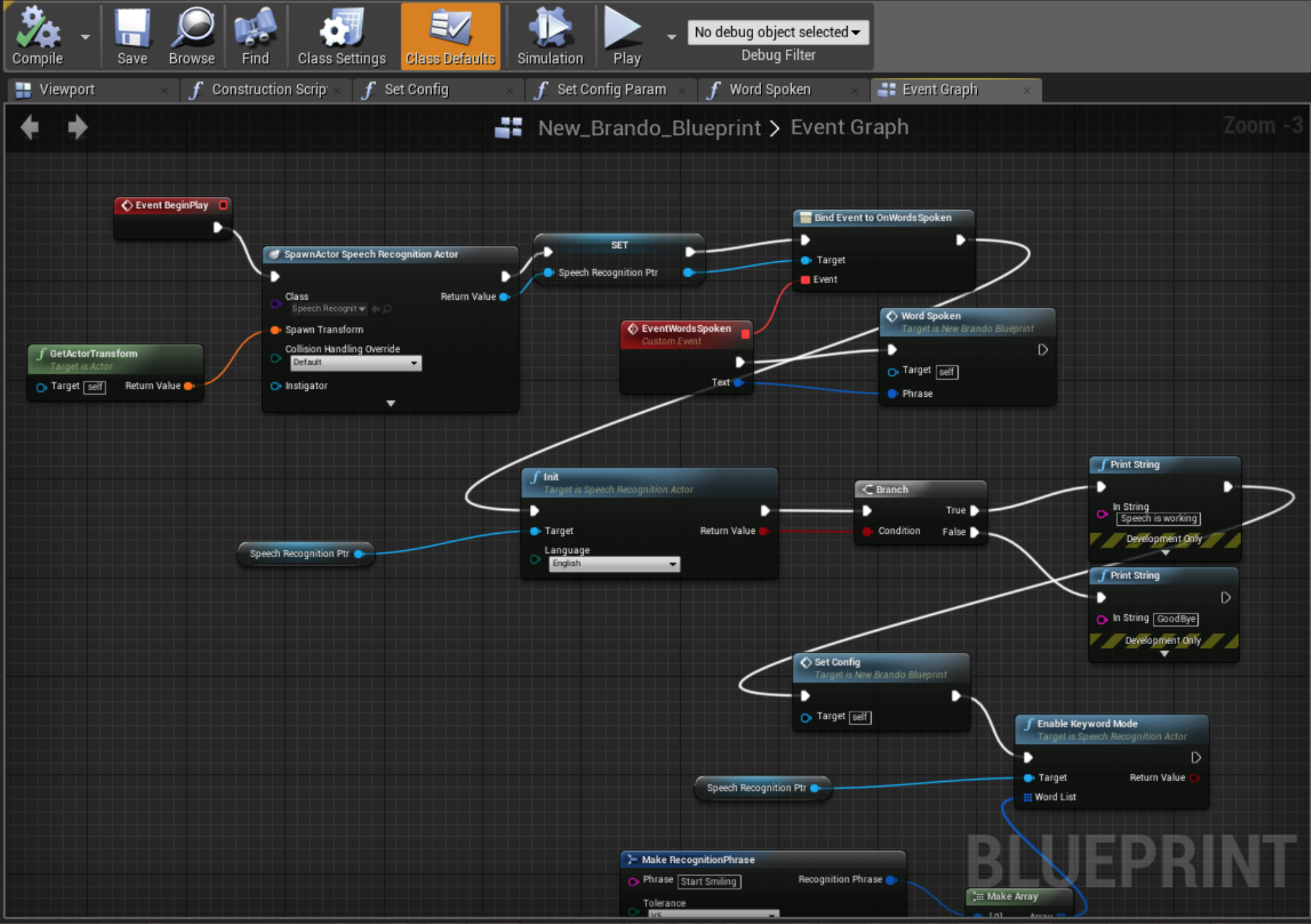

OSC: OPEN SOUND CONTROL

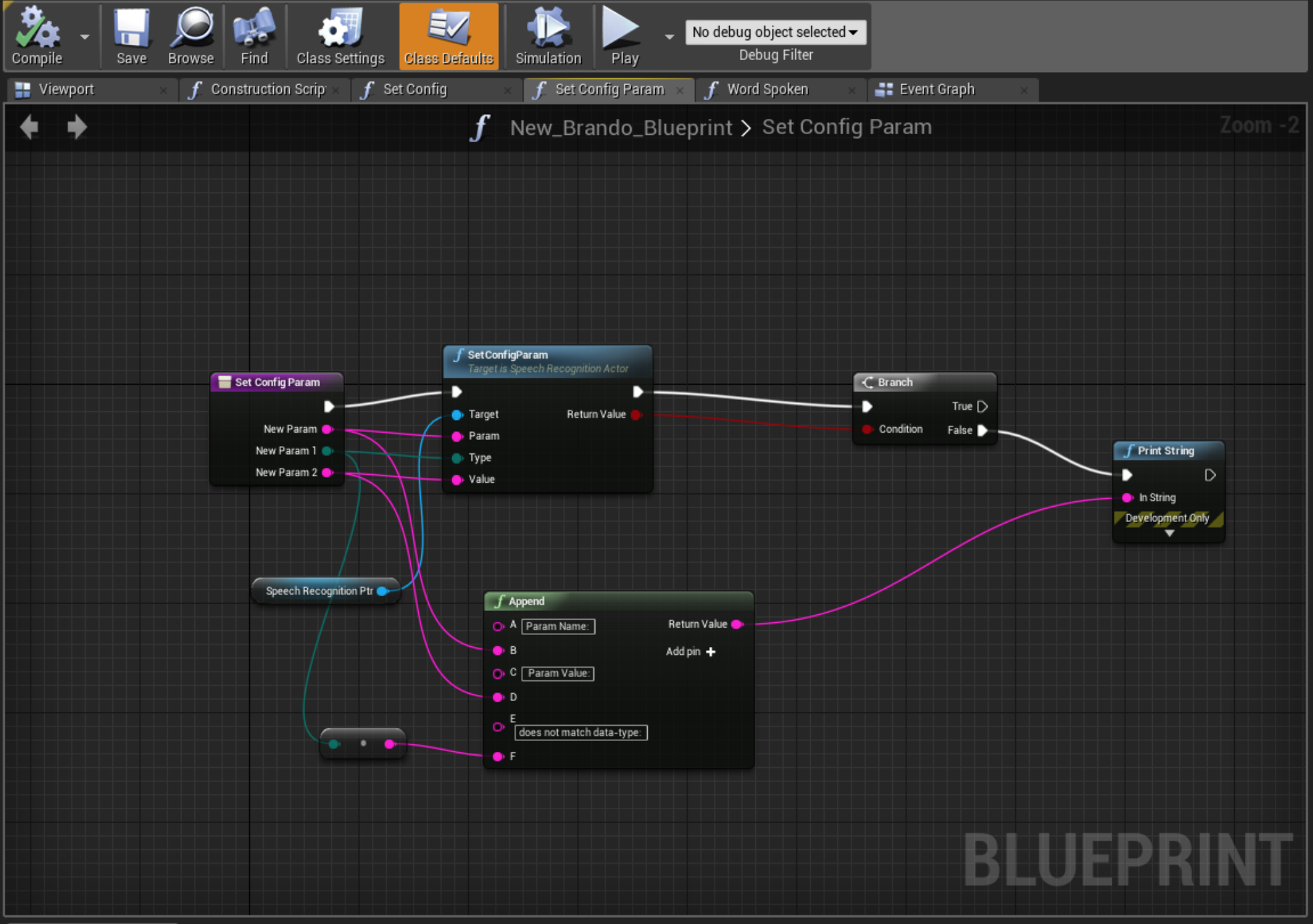
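
For readers unfamiliar with OSC, the sketch below shows roughly what a single OSC message looks like on the wire, following the OSC 1.0 layout: a null-terminated address padded to a multiple of four bytes, a padded type tag string, then big-endian arguments. The address `/brando/jaw_open` is a made-up example; the addresses actually used in the project are not documented here.

```python
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_float_message(address: str, value: float) -> bytes:
    """Build an OSC message carrying one 32-bit float argument."""
    return (
        osc_string(address)         # address pattern, e.g. "/brando/jaw_open"
        + osc_string(",f")          # type tag string: one float
        + struct.pack(">f", value)  # big-endian float32 payload
    )


if __name__ == "__main__":
    # Hypothetical address; not taken from the project.
    packet = osc_float_message("/brando/jaw_open", 0.5)
    print(len(packet), packet)
```

A packet like this is typically sent over UDP to whatever host and port the receiving application listens on.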

Within Unreal, we used an additional speech recognition plugin to establish control using voice commands.

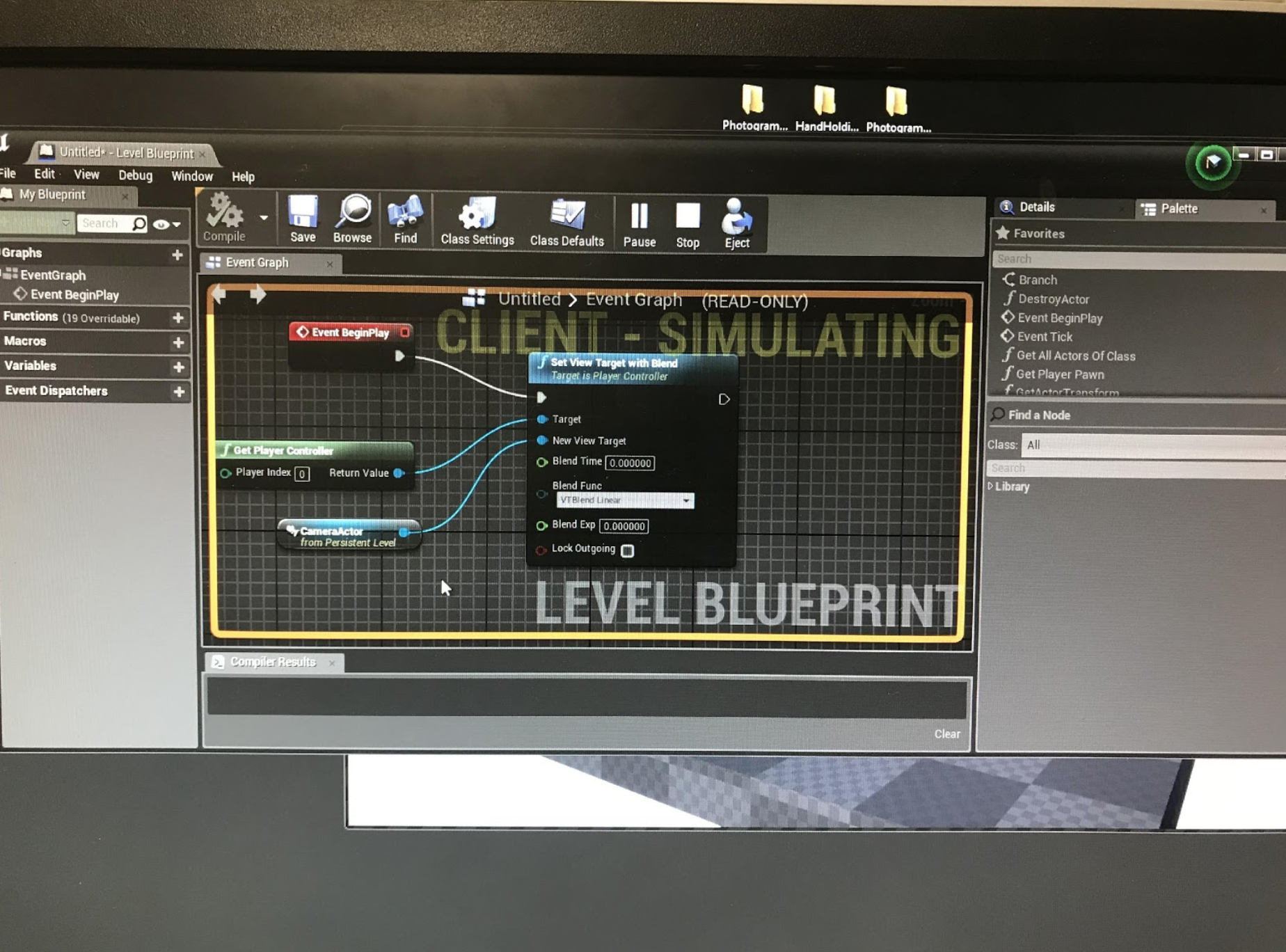

Re-create the blueprint from the plugin and refactor it to work with morph targets

Blend communication between speech recognition and the model to control expression using voice commands
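
One way to picture the voice-command path is a lookup from recognized words to expression presets, followed by a gradual blend of the morph target weights. This is a sketch under assumptions, not the blueprint logic from the plugin: the command words, preset values, and morph target names are hypothetical.

```python
import re
from typing import Dict, Optional

# Hypothetical expression presets: command word -> morph target weights.
EXPRESSION_PRESETS: Dict[str, Dict[str, float]] = {
    "smile": {"Smile_L": 1.0, "Smile_R": 1.0},
    "frown": {"BrowDown_L": 0.8, "BrowDown_R": 0.8},
}


def match_command(transcript: str) -> Optional[str]:
    """Return the first preset name spoken in the transcript, if any."""
    words = re.findall(r"[a-z]+", transcript.lower())
    for word in words:
        if word in EXPRESSION_PRESETS:
            return word
    return None


def blend_toward(current: Dict[str, float], target: Dict[str, float], t: float) -> Dict[str, float]:
    """Linearly interpolate every weight from current toward target by t (0..1)."""
    t = max(0.0, min(1.0, t))
    names = set(current) | set(target)
    return {
        name: current.get(name, 0.0) + (target.get(name, 0.0) - current.get(name, 0.0)) * t
        for name in names
    }


if __name__ == "__main__":
    command = match_command("Brando, please smile.")
    if command is not None:
        face = blend_toward({}, EXPRESSION_PRESETS[command], 0.5)
        print(command, face)
```

Calling `blend_toward` once per frame with a small `t` eases the face into the new expression instead of snapping to it.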

PROJECTION MAPPING

The goal was to create separate camera views within Unreal that display two different perspectives:

Close-up on the Brando avatar’s facial gestures

The avatar existing within the 3D modeled theater

From Unreal, the camera feed needs to be routed through Spout so that it can be sent to the projectors via the projection mapping software Resolume