Over the course of the semester, I built a few projects that I felt could complement each other. My final is a compilation of the following:

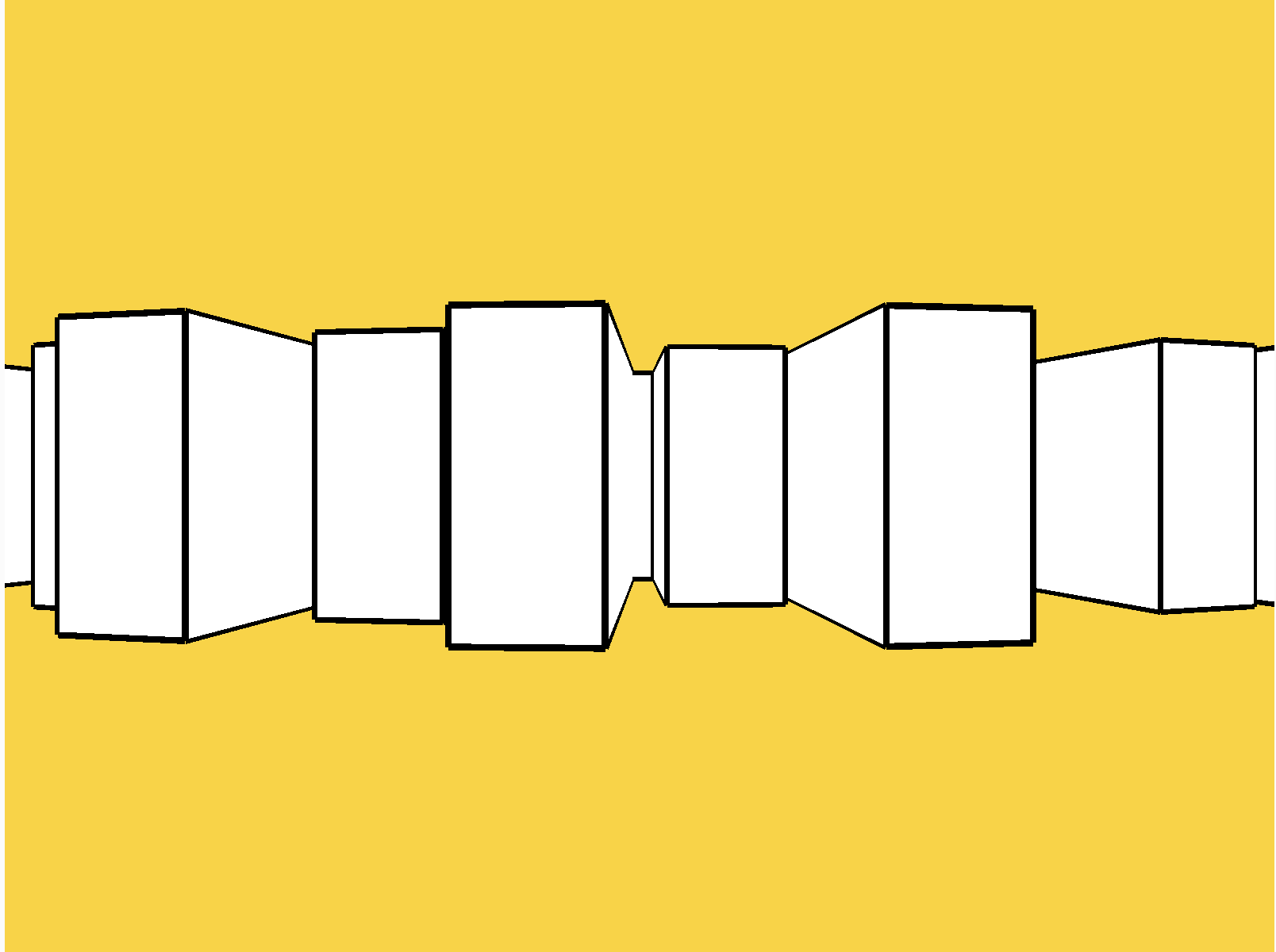

Animation, Variables: Breathing Visualization, https://editor.p5js.org/niccab/sketches/JWJ-ro0cH

3D rotation responsive to microphone

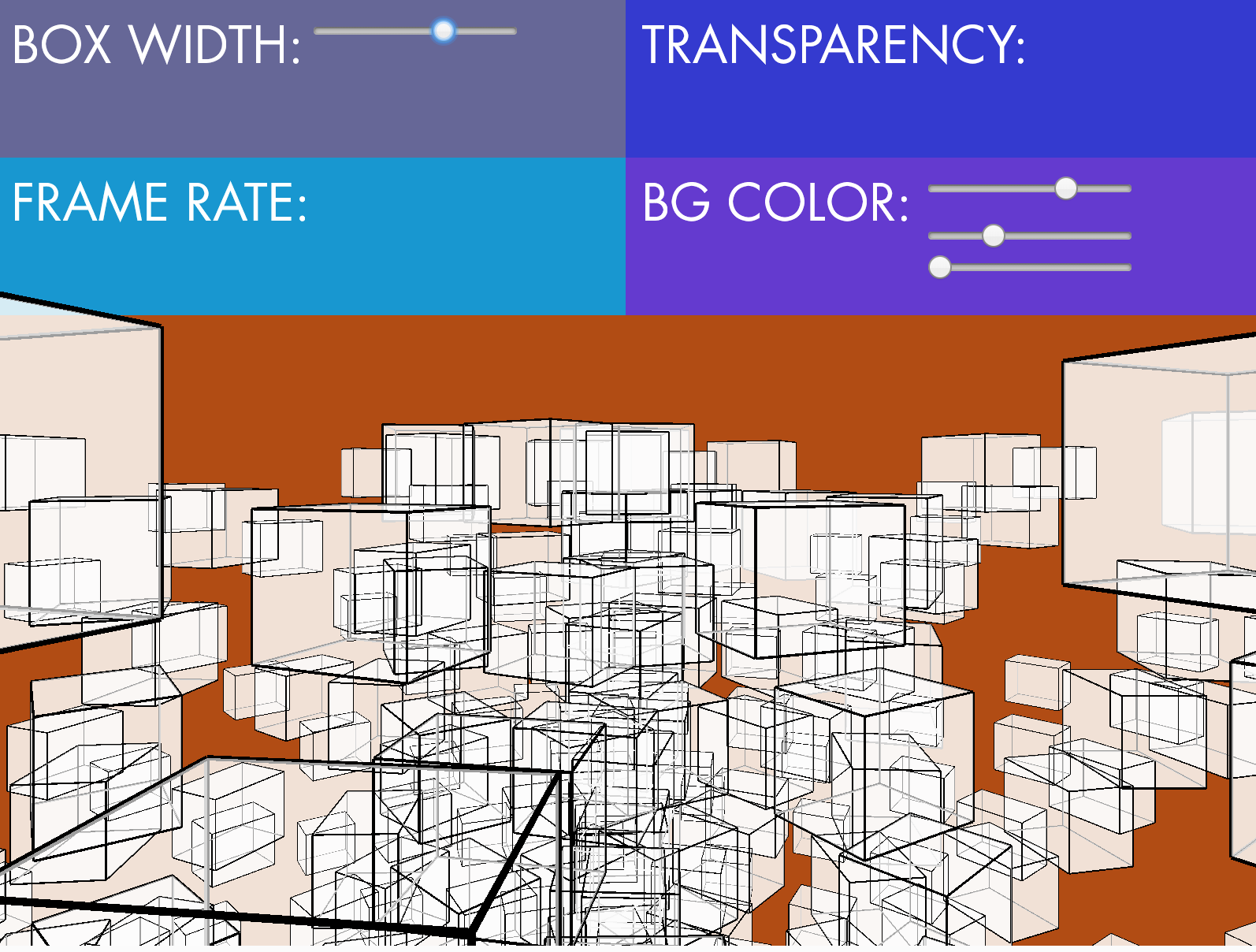

Repetition: 3D Cubes, https://editor.p5js.org/niccab/sketches/DGC0eVKoZ

Exploration of 3D space with camera angles

Pixel & Sound: Birds of Paradise, https://editor.p5js.org/niccab/sketches/utn2z7iGW

PoseNet activated sounds

A very inspirational website that has helped me generate new ideas is: http://www.generative-gestaltung.de/2/

While I was exploring 3D space, Allison recommended that I look into the EasyCam library: https://github.com/diwi/p5.EasyCam

After working through a number of examples, I wanted to try navigating a 3D space with PoseNet.

Here’s a sketch of my exploration: https://editor.p5js.org/niccab/sketches/v4ralRUJ2
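As a rough illustration of the idea (a minimal sketch under my own assumptions, not the code from the sketch linked above), one way to steer a camera with PoseNet is to turn the nose position into a point orbiting the origin. The helper name `noseToCamera`, the 45 and 30 degree ranges, and the orbit radius are all hypothetical choices for this example.

```javascript
// Hypothetical helper: convert a nose position (in video pixels) into a
// camera position that orbits the origin at a fixed radius.
function noseToCamera(nose, videoW, videoH, radius) {
  // Normalize to [-1, 1], with 0 at the center of the video frame.
  const nx = (nose.x / videoW) * 2 - 1;
  const ny = (nose.y / videoH) * 2 - 1;

  // Assumed ranges: up to 45 degrees of yaw and 30 degrees of pitch.
  const yaw = nx * (Math.PI / 4);
  const pitch = ny * (Math.PI / 6);

  // Spherical to Cartesian coordinates around the origin.
  return {
    x: radius * Math.sin(yaw) * Math.cos(pitch),
    y: radius * Math.sin(pitch),
    z: radius * Math.cos(yaw) * Math.cos(pitch),
  };
}

// A nose in the middle of a 640x480 video puts the camera straight ahead.
const centered = noseToCamera({ x: 320, y: 240 }, 640, 480, 500);
console.log(centered); // x and y are 0, z is 500
```

In a WEBGL sketch, the result could be passed to p5's `camera(c.x, c.y, c.z, 0, 0, 0, 0, 1, 0)` inside `draw()`, with `nose` taken from something like `poses[0].pose.nose` in the ml5.js PoseNet callback. Depending on whether the webcam image is mirrored, the sign of `nx` may need to be flipped.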

When I was talking to people about my project and asking whether anyone had experience with libraries or 3D environments, Jiaxin showed me his final project. He created a 3D environment from SCRATCH, without knowing that there was a camera function in WEBGL. In his final project, he calculates the angles at which the wall planes should be positioned, based on face detection via ml5.js. While his project takes a different approach from mine, it gave me so much insight into the math involved in simulating a 3D environment in a 2D way. I recommend checking out his very detailed blog post here: https://wp.nyu.edu/jiaxinxu/2019/12/11/icm-media-final-the-tunnel/
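To give a flavor of the kind of math involved, here is a minimal sketch that assumes a simple pinhole-style projection. It is not Jiaxin's actual method (his post covers that in detail). The function name `project`, the `focal` distance, and the coordinate setup are assumptions made for this example.

```javascript
// Project a 3D point onto the 2D screen plane (z = 0) as seen by an eye
// located at (eye.x, eye.y), a distance `focal` in front of the screen.
// Coordinates are assumed to be centered on the canvas.
function project(point, eye, focal) {
  // Points deeper into the scene (larger z) are pulled toward the eye's
  // x/y position, which is what makes far walls look smaller.
  const scale = focal / (focal + point.z);
  return {
    x: eye.x + (point.x - eye.x) * scale,
    y: eye.y + (point.y - eye.y) * scale,
  };
}

// A far corner of a tunnel wall, viewed head-on and then with the
// viewer's face moved 40 units to the right.
const corner = { x: 100, y: 100, z: 400 };
console.log(project(corner, { x: 0, y: 0 }, 400));
console.log(project(corner, { x: 40, y: 0 }, 400));
```

Moving the eye shifts the far corner more than the near ones, so connecting the projected corners with 2D quads gives the impression of walls tilting as the detected face moves.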