Here is the full video documentation of Glowing With Fire, an audio-visual, projection-mapped performance made during the COVID-19 pandemic.

This performance was created for LIPP.tv, the final performance project of the Live Image Processing and Performance (LIPP) class at NYU’s ITP. LIPP.tv is a creative response to the question of how code, video, networks, and art can create a new experience in live performance. Each student created their own short TV show influenced by video art, experimental animation, public access TV, and more. The entire event was created and performed remotely, with students producing the website, commercials, music, and animations.

The original stream took place on Twitch on May 11th, 2020.

Please watch the full recorded program here: https://lipp.tv/

Development

For LIPP.tv, I am collaborating with my roommate Heidi Lorenz, also known by her project name OCTONOMY. She will provide the audio, and I will use a combination of Max and MadMapper to projection-map our backyard. This pre-recorded performance will also be shown with Cultivated Sound, a hybrid label and collective.

Here is a skeletal version of the track, which she is still working on. We discussed adding a water element: she will pour water into a bowl fitted with contact mics and distort the resulting sound.

Her first samples were very different from this.

Here is my Are.na board with some inspiration and references.

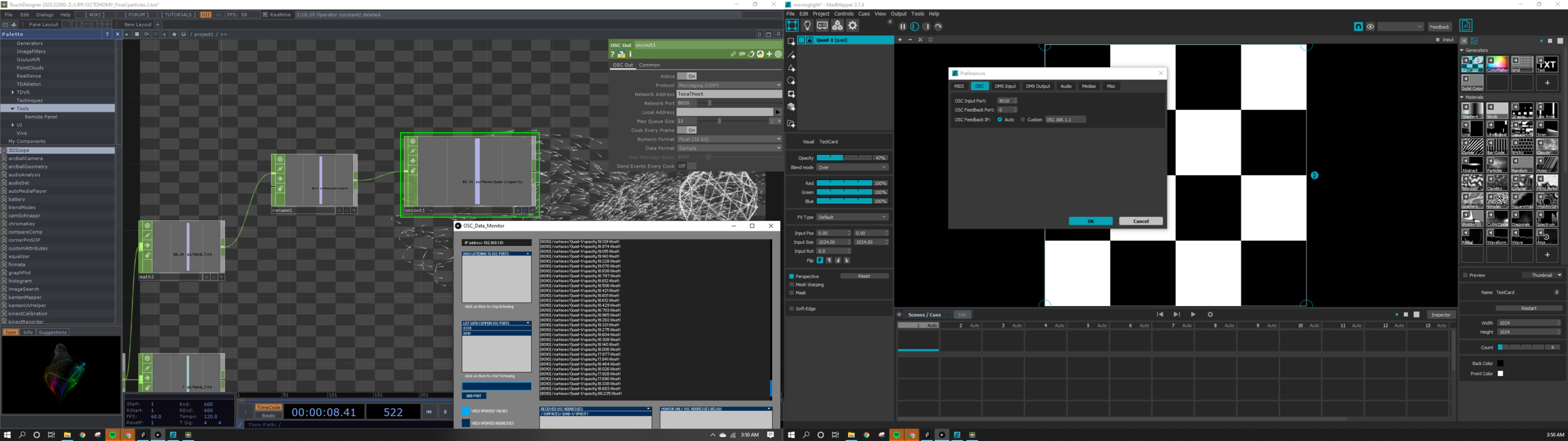

Before the track moved in its new direction, I thought I could incorporate some experiments I was working on for Light & Interactivity: my gesture-controlled DMX moving-head lights, which also include a particle system visualization in TouchDesigner.

I knew that securing an expensive short-throw projector that doesn’t belong to me (thanks ER) and cantilevering it off the edge of a fire escape would be a huge source of anxiety…

After all this, the projector still wasn't at an ideal angle. Back to fabricating a new attachment and remapping the layout…

References