Prompt

In teams of two to four, create a design to show on Zoom that uses tactics of illusion to artistically engage with a concept rooted in cultural, sociological, or philosophical dilemmas of representation.

No specific technological tools are prescribed or required; however, the design must be possible to show in class on Zoom. Suggested topics include: underrepresented stories, designs that expose the worship of the written word, histories or cultures at risk of deletion, the power structures behind our systems of representation ...

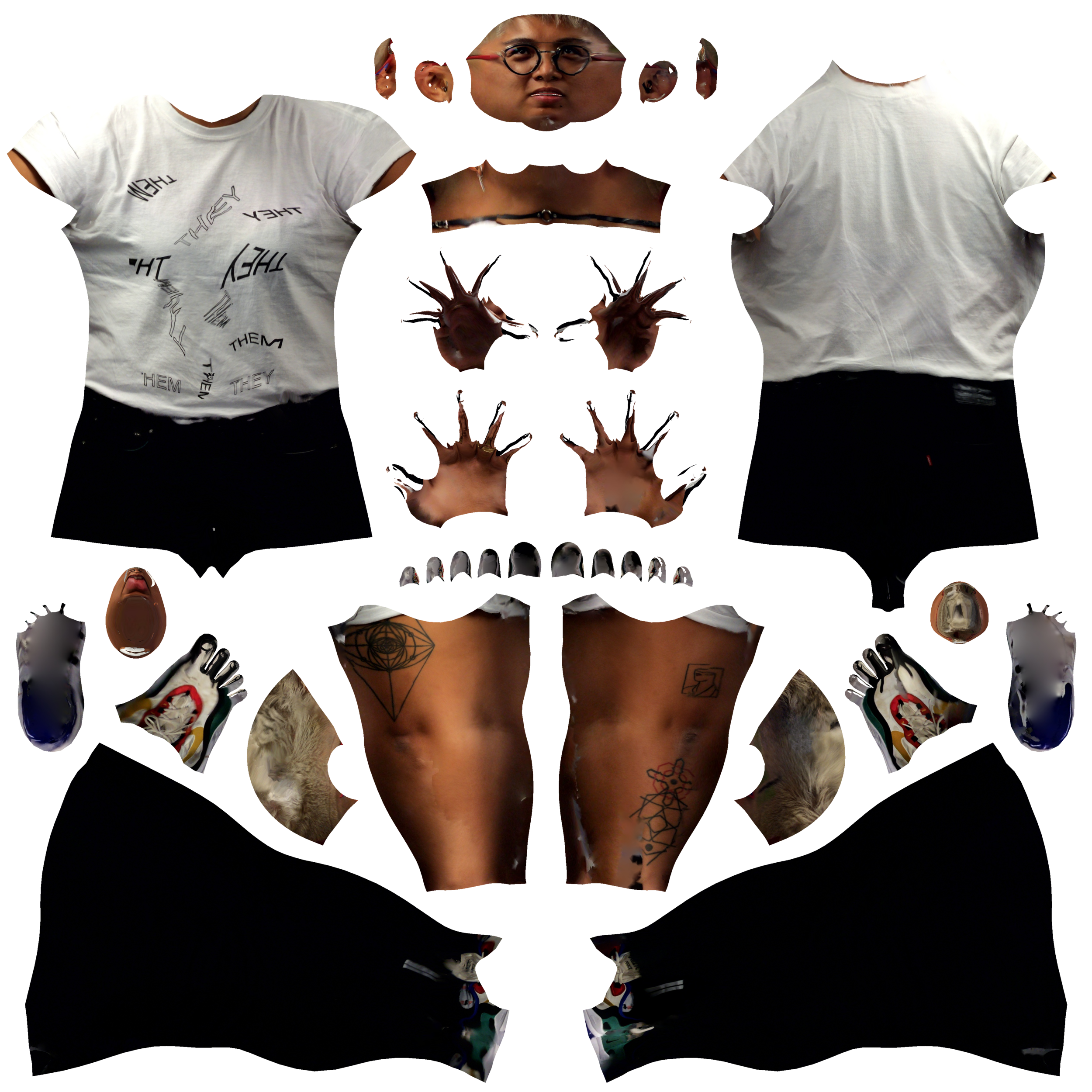

Two potential topics I’d like to explore emerged from a previous project, The Evolution of the Babaylan, which I made last semester as my final for Performative Avatar.

The erasure of Filipinx indigenous culture as a result of Spanish and American colonization.

The babaylan is a femme shaman who could be of any gender, since femininity was seen as a vehicle to the spirit world.

While researching the babaylan further, I learned that in the 16th century the Spanish demonized the babaylans to uphold patriarchy, enforcing the ideas that women shouldn't be leaders and that their healing practices were less powerful than Western medicine. Spanish colonizers actively erased indigenous rituals and beliefs, and converted half of the Philippine population to Christianity within the first 25 years of their arrival. They created the myth of the Aswang, an umbrella term for female, shape-shifting creatures that feed on fetuses and the sick, to use fear as a mechanism of control.

The experience of searching Getty Images for “filipino faces” and getting results for “chinese faces”

It still stirs me up to be incorrectly categorized and lumped into another Asian ethnicity, presumably the one with the most image results. It feels conflicting to know that it is impossible to compile a list of facial characteristics that make up a “Filipino face” when my motherland was colonized and is a melting pot of its neighboring countries, and when I myself don’t carry the features typically used to describe people from the Philippines.

References:

Barbara Jane Reyes - Letters to a Young Brown Girl

Shalini Kantayya - Coded Bias

Safiya Umoja Noble - Algorithms of Oppression

Cathy O’Neil - Weapons of Math Destruction

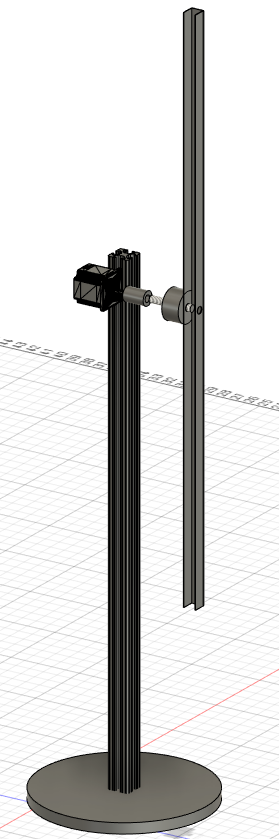

For the illusion, I would like to incorporate projection mapping in a similar vein to how Liu Bolin uses painting and photography to make himself visually disappear into his surroundings.

I am still working on the visual construction of the illusion.

A third topic came to me through my social media feed, via Munroe Bergdorf’s Instagram and Ashley Easter’s Twitter:

The Bible is a transcription of oral tradition with innumerable translations and interpretations, and there is no single author in whom to place infallible trust. As someone who was raised Catholic, I felt shame for my queerness early on, feeling the weight of this supposedly grave sin and instilled with a fear of hell. The Revised Standard Version (1946) introduced the word “homosexuals” into 1 Corinthians 6:9, a purposeful mistranslation made to align with the medical establishment and broader culture of the 1940s, which saw same-sex attraction as a mental illness.

I am not yet sure how to incorporate illusion into this, but one visual reference could be Lil Nas X’s descent into hell in his music video for “MONTERO (Call Me By Your Name)” :)